Big Data Analytics and XAI Group

We are a team of computer scientists with more than 15 years of experience in developing methods for data exploration and analysis for interdisciplinary research projects. We focus on two primary areas:

- Big Data Analytics

- Explainable Artificial Intelligence (XAI)

Our group specialises in exploratory data analysis that combines the computational power of data analytics with the human capacity for visual pattern recognition. This integration allows scientists to interactively and effectively explore, analyse, and make sense of large volumes of data and of different aspects of ML models.

Our research is grounded in computer science, but we develop our methods in close cooperation with the scientists who use them. We engage in a "dialogue" with these scientists by conducting a user and task analysis.

Big Data Analytics

Big Data Analytics is an important tool in contemporary scientific research, enabling scientists to derive more value from the increasing volumes of scientific data through the use of advanced analytical techniques alongside traditional statistical insights. Our research is driven by practical scientific applications in fields such as remote sensing, fluid systems modelling, and Earth system modelling.

Our research directions are:

Find relations among heterogeneous data

Geoscientists make use of data from sensors, geoarchives (such as core samples), and simulations to study the Earth system. The data describe Earth processes on various spatial and temporal scales and through many different variables. Our methods facilitate the comparison of data from different sources and the integrated analysis of these heterogeneous data.

Research & Technologies:

- Comparison of ocean model output with reference data

- SLIVisu – Visual analysis methods to validate simulation models

- Visual exploration of categorical data in lake sediment cores

- ENAP - Enhancing scientific environmental data by using volunteered images in social media

- Digital Earth - Scientific workflows for interdisciplinary data analysis

Extract relevant information from large data sets

Current simulation models as well as observation systems generate large quantities of data. Our methods extract the important information from these data and present it in a compact and efficient manner to scientists.

Research & Technologies:

Explainable Artificial Intelligence (XAI)

The adoption of ML in scientific research is frequently impeded by the opaque nature of many ML models and the complexity of their application. XAI methods help overcome these barriers by making the ML black box more transparent, so that scientists can use the resulting models with more confidence. We develop XAI methods that make ML more transparent for geoscientists. Our research directions are:

XAI for exploring different aspects of black box ML models.

Our main focus is to explain the behaviour of machine learning models through model-agnostic methods. By focusing on model-agnostic XAI techniques, we aim to provide insights into a black box model regardless of its underlying architecture.
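A minimal sketch of one common model-agnostic technique, permutation feature importance, is given below. It is an illustration only, not the implementation of any of our tools; `toy_model`, the synthetic data, and all function names are hypothetical. The sketch only ever calls the model as a function, which is what makes the approach independent of the architecture.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]
Model = Callable[[Sequence[float]], float]


def mean_squared_error(model: Model, X: List[Row], y: List[float]) -> float:
    """Average squared difference between model predictions and targets."""
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(y)


def permutation_importance(
    model: Model, X: List[Row], y: List[float], n_repeats: int = 10, seed: int = 0
) -> List[float]:
    """Mean increase in error when one feature column is shuffled, per feature.

    Only model(row) is called, so the model is treated as a black box.
    """
    rng = random.Random(seed)
    baseline = mean_squared_error(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            # Shuffle column j to break its link with the target.
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mean_squared_error(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Hypothetical black box: strong dependence on feature 0, weak on feature 1,
# none on feature 2.
def toy_model(row: Sequence[float]) -> float:
    return 3.0 * row[0] + 0.5 * row[1]


data_rng = random.Random(42)
X = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
y = [toy_model(row) for row in X]
importances = permutation_importance(toy_model, X, y)
```

In this toy setting the importance of feature 0 exceeds that of feature 1, and the unused feature 2 receives an importance of zero.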

Research & technologies:

HSExplorer allows geoscientists to test changes in input data and observe how these alterations affect model responses, providing insights into model behaviour under various scenarios.
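The underlying idea of such a what-if analysis can be sketched in a few lines. The following is a simplified illustration under stated assumptions, not the HSExplorer implementation: `toy_model` is a hypothetical stand-in for a trained model, and `what_if_responses` is a name introduced here for the example.

```python
from typing import Callable, Dict, List, Sequence

Model = Callable[[Sequence[float]], float]


def what_if_responses(
    model: Model, sample: Sequence[float], feature: int, deltas: List[float]
) -> Dict[float, float]:
    """Change in model output when sample[feature] is shifted by each delta.

    All other inputs are held fixed at their original values.
    """
    baseline = model(sample)
    responses = {}
    for delta in deltas:
        perturbed = list(sample)
        perturbed[feature] += delta
        responses[delta] = model(perturbed) - baseline
    return responses


# Hypothetical stand-in model: the response to feature 0 saturates at 1.0,
# the response to feature 1 is linear.
def toy_model(x: Sequence[float]) -> float:
    return min(x[0], 1.0) + 2.0 * x[1]


sample = [0.5, 0.2]
deltas = [-0.5, 0.0, 0.5, 1.0]
feature0 = what_if_responses(toy_model, sample, 0, deltas)
feature1 = what_if_responses(toy_model, sample, 1, deltas)
```

Comparing `feature0` and `feature1` shows the kind of insight such an analysis can give: increasing feature 0 beyond the saturation point no longer changes the output, whereas the response to feature 1 keeps growing with the size of the change.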

XAI for exploring specific architectures of ML models.

![Icon](/fileadmin/_processed_/b/1/csm_method_specific_f5e74bea63.png)

Advanced ML models like Transformers, Autoencoders, and Reservoir Computing models are important for analysing complex spatial, temporal, and dynamic systems in geoscience. These ML models have unique architectural characteristics and learning processes. Developing model-oriented XAI techniques that are tailored to these specific properties can provide more relevant and insightful explanations.

Research & technologies:

- We are developing the Multi-Level Visual Attention Explorer (MVisAE), an explainability method that uses attention visualization to provide insights into larger Transformer-based models such as the 3D-ABC foundation model. The method is designed specifically for the Swin Transformer, a widely used architecture that serves as the basis for many visual and multimodal foundation models.

XAI for ML model development transparency.

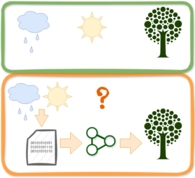

To use ML models in geoscience research, scientists must undertake several steps: cleaning and preparing data, selecting relevant features and architectures, training the model and finding optimal hyperparameter configurations, and testing and evaluating the resulting model. The complexity of these stages often makes the ML pipeline opaque and demands a level of ML expertise that many domain specialists may lack. We aim to make this process more transparent so that geoscientists gain confidence in applying ML methods in their research.

Research & technologies:

HPExplorer assists geoscientists in exploring the hyperparameter space by identifying stable regions where ML models show consistent performance, complemented by a hyperparameter (HP) importance analysis to enhance understanding of each parameter's impact.
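One simple way to think about a "stable region" is a part of the hyperparameter grid where performance is both high and nearly constant across neighbouring configurations. The sketch below illustrates this notion only; it is not the HPExplorer algorithm, it does not cover HP importance, and the score grid, thresholds, and function names are hypothetical.

```python
from statistics import mean, pstdev
from typing import List, Tuple

Grid = List[List[float]]


def neighbourhood(scores: Grid, i: int, j: int) -> List[float]:
    """Scores of cell (i, j) and its up-to-8 direct neighbours on the grid."""
    rows, cols = len(scores), len(scores[0])
    return [
        scores[a][b]
        for a in range(max(0, i - 1), min(rows, i + 2))
        for b in range(max(0, j - 1), min(cols, j + 2))
    ]


def stable_cells(scores: Grid, min_mean: float, max_spread: float) -> List[Tuple[int, int]]:
    """Grid cells whose neighbourhood scores are both high and nearly constant."""
    stable = []
    for i in range(len(scores)):
        for j in range(len(scores[0])):
            local = neighbourhood(scores, i, j)
            if mean(local) >= min_mean and pstdev(local) <= max_spread:
                stable.append((i, j))
    return stable


# Hypothetical validation accuracies: rows = learning rates, columns = batch sizes.
learning_rates = [1e-4, 1e-3, 1e-2, 1e-1]
batch_sizes = [16, 32, 64, 128]
scores = [
    [0.80, 0.81, 0.80, 0.79],
    [0.81, 0.82, 0.81, 0.80],
    [0.70, 0.93, 0.60, 0.75],
    [0.40, 0.55, 0.35, 0.50],
]

stable = stable_cells(scores, min_mean=0.78, max_spread=0.02)
# Single best-scoring configuration, for comparison with the stable region.
best = max(
    ((i, j) for i in range(len(scores)) for j in range(len(scores[0]))),
    key=lambda cell: scores[cell[0]][cell[1]],
)
```

In this made-up grid the single best score is an isolated peak surrounded by much weaker configurations, so it does not belong to the stable region. The stable cells all lie in the row of the smallest learning rate, where small changes to the settings barely affect performance.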

XAI for explaining causality.

Conventional ML models are often good at finding patterns and associations in data, but they struggle to capture causal relationships. Causality research in XAI is particularly important for geoscientists because it helps them distinguish genuine cause-and-effect relationships in their data from mere correlations.

ClarifAI Platform

ClarifAI integrates our research group's XAI methods into a cohesive platform. Its user-centric design philosophy enhances usability, simplifying the application of XAI methods for geoscientists without extensive expertise in ML. ClarifAI's strategic objective is to develop a comprehensive XAI pipeline that offers detailed, step-by-step guidance through the transparency challenges of the entire ML model lifecycle. This pipeline should equip geoscientists with the essential tools to employ ML methods more confidently in their research.